I’ve hit a problem in our app, Shareshot, with rendering shadows using Core Image. Our shadows are a black image with a blur applied, so the image is rgb(0,0,0) with alpha varying from 1 at the darkest center of the shadow to 0 at the edges.

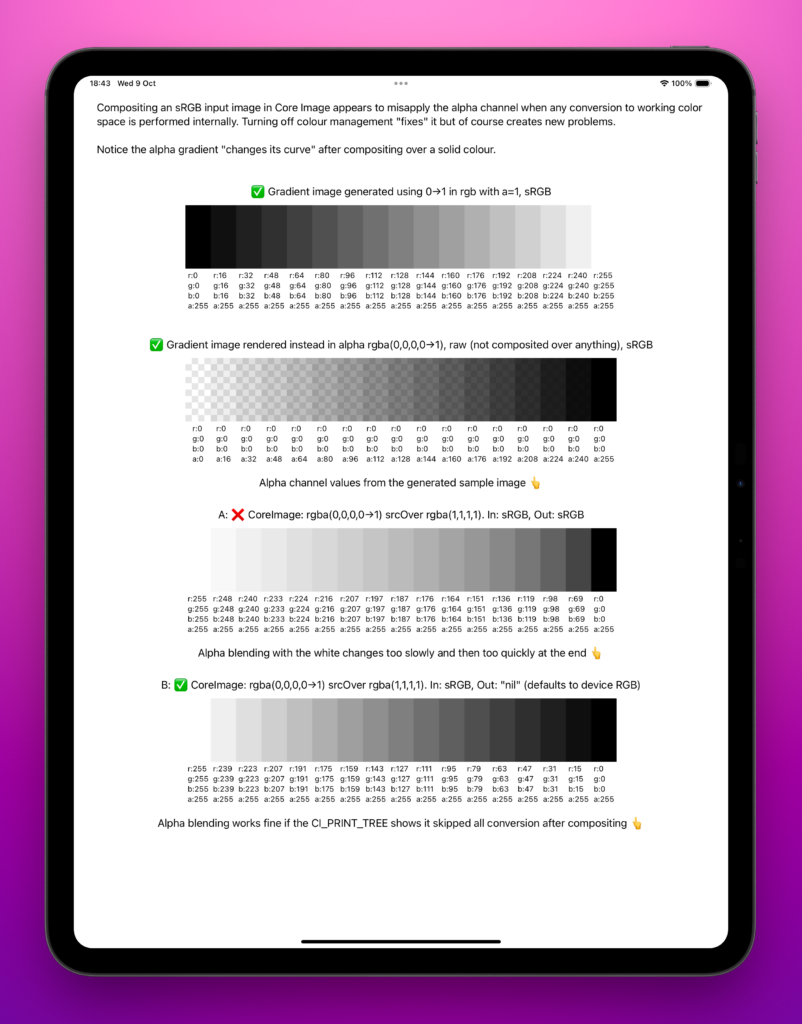

We found that the shadow shape looks wrong. On further investigation, simply compositing black with a varying-alpha gradient onto white in Core Image gives the wrong result: a different “curve” is applied to the alpha during compositing. This happens with an sRGB gradient image as input, a default CIContext with its default linear working colour space, and sRGB output.
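To make the “different curve” concrete, here is a minimal sketch in plain Swift (no Core Image) of one plausible explanation. It assumes, as a hypothesis rather than confirmed behaviour, that a UI blends on sRGB-encoded values while a linear working space decodes to linear light, blends, and re-encodes for sRGB output. It uses the standard sRGB transfer functions; `blendEncoded` and `blendLinear` are hypothetical names.

```swift
import Foundation

// Standard sRGB transfer functions (IEC 61966-2-1) for one channel in [0, 1].
func srgbToLinear(_ v: Double) -> Double {
    v <= 0.04045 ? v / 12.92 : pow((v + 0.055) / 1.055, 2.4)
}

func linearToSRGB(_ v: Double) -> Double {
    v <= 0.0031308 ? v * 12.92 : 1.055 * pow(v, 1.0 / 2.4) - 0.055
}

// Source-over for a straight (unpremultiplied) source colour.
func sourceOver(src: Double, dst: Double, alpha: Double) -> Double {
    src * alpha + dst * (1 - alpha)
}

// Hypothetical: blend black over white directly on sRGB-encoded values.
func blendEncoded(alpha: Double) -> Double {
    sourceOver(src: 0, dst: 1, alpha: alpha)
}

// Hypothetical: decode to linear, blend, then encode for sRGB output.
func blendLinear(alpha: Double) -> Double {
    linearToSRGB(sourceOver(src: srgbToLinear(0), dst: srgbToLinear(1), alpha: alpha))
}

for alpha in [0.0, 0.25, 0.5, 0.75, 1.0] {
    print(String(format: "alpha %.2f  encoded %.3f  linear %.3f",
                 alpha, blendEncoded(alpha: alpha), blendLinear(alpha: alpha)))
}
```

Under this assumption, at alpha 0.5 the linear-space blend yields an sRGB grey of about 0.735 rather than 0.5, i.e. a lighter result for the same alpha.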

It feels like it simply shouldn’t be this way, but… I’m probably holding it wrong, right? I’d love to hear from people about this. I previously worked through it with a DTS engineer, who confirmed it looks unexpected, and I’ve now submitted a second feedback (FB15442718 “CoreImage appears to composite alpha incorrectly when managing colour”). That second feedback is reproduced in full below, with the sample code and CI_PRINT_TREE reports. The feedback text also describes some other very confusing behaviours in CIContext.createCGImage.

I don’t understand how this could really be a bug, but if it isn’t one, I also don’t understand how to work around it while keeping colour management.

Please describe the issue and what steps we can take to reproduce it:

I have encountered apparent issues when compositing images with alpha in Core Image.

I’m fully aware there’s a strong possibility I’m holding colour management wrong, but I have had a long back-and-forth with a DTS engineer, who believes I am not.

Scenario:

If you take a simple rgb(0,0,0) image with an alpha gradient from 0 to 1 and composite it over opaque white, rgba(1,1,1,1), the result looks incorrect out of the box: a curve is applied to the greys in the result, changing the shape of the gradient.

Nominally, colour management should not affect the treatment of alpha. However, there are several strange “workarounds” for this behaviour:

- Pass `NSNull()` as the `workingColorSpace` when constructing the `CIContext`. Disabling colour management completely is, of course, not an attractive prospect.
- Pass `nil` as the `colorSpace` when calling `CIContext.createCGImage(_:from:format:colorSpace:)`. This results in a `CGImage` that reports it is “Device RGB”, which itself seems like it would require a colour conversion from the default linear RGB `workingColorSpace`. Yet according to the generated program PDF (all attached), the image is only converted to linear internally and never converted back.
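For reference, here is a minimal Swift sketch of how the default setup and the two workarounds might be configured. It is not the attached sample project: it assumes an Apple platform with Core Image, builds the ramp with `CIFilter.linearGradient()` instead of the Core Graphics/UIKit gradient, and uses `.RGBA8` as the output format. It only shows the configuration; the pixel values each variant produces are the subject of this report and are not asserted here.

```swift
import CoreGraphics
import CoreImage
import CoreImage.CIFilterBuiltins
import Foundation

let width = 256
let rect = CGRect(x: 0, y: 0, width: width, height: 1)

// Black whose alpha ramps from 0 (left) to 1 (right).
// Assumption: built in Core Image here for brevity.
let ramp = CIFilter.linearGradient()
ramp.point0 = CGPoint(x: 0, y: 0)
ramp.point1 = CGPoint(x: CGFloat(width), y: 0)
ramp.color0 = CIColor(red: 0, green: 0, blue: 0, alpha: 0)
ramp.color1 = CIColor(red: 0, green: 0, blue: 0, alpha: 1)

// Composite over opaque white and crop the infinite extents to one row.
let composite = ramp.outputImage!
    .composited(over: CIImage(color: .white))
    .cropped(to: rect)

let srgb = CGColorSpace(name: CGColorSpace.sRGB)!

// Default context (linear working space) with sRGB output: the curved result.
let managed = CIContext()
let defaultResult = managed.createCGImage(composite, from: rect,
                                          format: .RGBA8, colorSpace: srgb)

// Workaround 1: disable colour management via an NSNull working colour space.
let unmanaged = CIContext(options: [.workingColorSpace: NSNull()])
let unmanagedResult = unmanaged.createCGImage(composite, from: rect,
                                              format: .RGBA8, colorSpace: srgb)

// Workaround 2: keep the default context but pass nil as the output colour space.
let nilResult = managed.createCGImage(composite, from: rect,
                                      format: .RGBA8, colorSpace: nil)
```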

I have attached a project that demonstrates all this: it uses Core Graphics/UIKit to generate the gradient, composites it over white using Core Image, and then samples the resulting image to inspect the actual pixel values and help debug the issue.

You can see that, moving from left to right across the gradient, the fall-off to black is first too slow and then too fast compared with the normal compositing you get when this gradient is shown over white in a UI (which presumably takes place entirely in device RGB, after the input gradient image is converted from sRGB to device RGB).
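The “too slow, then too fast” fall-off can be quantified with a small sketch. It assumes, as a hypothesis only, that Core Image blends in linear light and re-encodes to sRGB while the UI blends sRGB-encoded values. It samples alpha at 0.1 intervals and prints how much the output grey drops between neighbouring samples; `falloff` is a hypothetical helper.

```swift
import Foundation

// Standard sRGB encoding (IEC 61966-2-1) for one linear channel in [0, 1].
func linearToSRGB(_ v: Double) -> Double {
    v <= 0.0031308 ? v * 12.92 : 1.055 * pow(v, 1.0 / 2.4) - 0.055
}

// Alpha samples 0.0, 0.1, ..., 1.0 across the gradient (left to right).
let alphas = (0...10).map { Double($0) / 10 }

// Black over white gives 1 - alpha in whichever space the blend happens.
// Blending on encoded values leaves it as-is; blending in linear light
// (black and white decode to 0 and 1) needs re-encoding for sRGB output.
let encodedGreys = alphas.map { 1 - $0 }
let linearGreys = alphas.map { linearToSRGB(1 - $0) }

// How much the output grey falls between neighbouring alpha samples.
func falloff(_ greys: [Double]) -> [Double] {
    zip(greys, greys.dropFirst()).map { $0 - $1 }
}

let encodedSteps = falloff(encodedGreys)
let linearSteps = falloff(linearGreys)
for (i, (e, l)) in zip(encodedSteps, linearSteps).enumerated() {
    print(String(format: "alpha %.1f-%.1f  encoded drop %.3f  linear drop %.3f",
                 alphas[i], alphas[i + 1], e, l))
}
```

Under this assumption the encoded blend drops a steady 0.1 per step, while the linear-space blend drops under half that at the light end and more than three times it at the dark end.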

I’ve also attached what appears to be a related oddity:

If you pass `nil` as the `colorSpace` to `createCGImage`, the CI_PRINT_TREE program output (see “NIL – 49254_1_2_program_graph.pdf”) shows a conversion from sRGB to linear before compositing, but no conversion from linear to sRGB after compositing. The end result appears to be correct, both in the pixel data and visually.

The implication is either that the image data is left in linear RGB while the returned image declares it is in Device RGB, or that the conversion to linear RGB had no effect because we are compositing only black plus an alpha channel over white.

If, however, you pass linear RGB as the output `colorSpace` to `CIContext.createCGImage`, you would expect to receive the same image, tagged as linear RGB. Instead, the result is incorrect again (the grey curve changes) and the image still reports that it is Device RGB.

What’s more, the accompanying program PDF (“LINEAR SRGB 49567_1_2_program_graph.pdf”) shows a Core Image program identical to the one where `nil` is passed, and yet the output is very different.

Going one step further and passing sRGB as the `colorSpace` to `createCGImage`, the result is again wrong, in the same way as the linear attempt. This time the graph has an extra un-premul -> linear_to_srgb -> premul phase after compositing, and the returned image is tagged sRGB, albeit with the wrong pixel values. See “SRGB 49484_1_2_program_graph.pdf”.
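A minimal model of the program graphs described here can show why the `nil` and sRGB outputs differ even though their compositing stages look the same. This is plain Swift, not Core Image. It assumes premultiplied pixels, the standard sRGB curves, and that the un-premul -> linear_to_srgb -> premul phase is the only difference between the two graphs. `Pixel`, `mapColour` and `render` are hypothetical names.

```swift
import Foundation

// Premultiplied pixel; a single grey channel is enough for black/white greys.
struct Pixel {
    var grey: Double
    var alpha: Double
}

func srgbToLinear(_ v: Double) -> Double {
    v <= 0.04045 ? v / 12.92 : pow((v + 0.055) / 1.055, 2.4)
}

func linearToSRGB(_ v: Double) -> Double {
    v <= 0.0031308 ? v * 12.92 : 1.055 * pow(v, 1.0 / 2.4) - 0.055
}

// un-premul -> transfer function -> premul, mirroring the stages in the graphs.
func mapColour(_ p: Pixel, _ transfer: (Double) -> Double) -> Pixel {
    guard p.alpha > 0 else { return p }
    return Pixel(grey: transfer(p.grey / p.alpha) * p.alpha, alpha: p.alpha)
}

// Premultiplied source-over.
func sourceOver(_ src: Pixel, _ dst: Pixel) -> Pixel {
    Pixel(grey: src.grey + dst.grey * (1 - src.alpha),
          alpha: src.alpha + dst.alpha * (1 - src.alpha))
}

// Black at `alpha` over opaque white, with or without the final encode stage.
func render(alpha: Double, encodeToSRGB: Bool) -> Double {
    // Decoding leaves 0 and 1 (essentially) unchanged.
    let black = mapColour(Pixel(grey: 0, alpha: alpha), srgbToLinear)
    let white = mapColour(Pixel(grey: 1, alpha: 1), srgbToLinear)
    var out = sourceOver(black, white)
    if encodeToSRGB {
        out = mapColour(out, linearToSRGB)
    }
    return out.grey
}

for alpha in [0.25, 0.5, 0.75] {
    print(String(format: "alpha %.2f  nil-style %.3f  sRGB-style %.3f",
                 alpha, render(alpha: alpha, encodeToSRGB: false),
                 render(alpha: alpha, encodeToSRGB: true)))
}
```

In this model the `nil`-style result equals an sRGB-space blend (1 - alpha), because decoding black and white does not change them, while the extra encode stage produces the lighter curve. That is consistent with the second explanation offered in the post, but it is a model, not confirmation of what Core Image does internally.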

It’s extremely befuddling, and I have no clear path yet to work around this problem while maintaining colour management.
