I’ll be honest: at first I felt there was not much to get excited about at this WWDC. Personally I am very happy to see the notification improvements and Siri Shortcuts, and after running the iOS 12 beta on an iPhone 6 I can confirm that the performance improvements are very real.

However, on deeper investigation from the point of view of the Flint framework, there were some definite points of interest that affect us, and some new things we will be able to do once the final OS releases come out, so that we get even more out of our code. If you don’t know what it is, Flint is a small Swift framework that helps you modularise your code around Features and Actions, removing huge amounts of boilerplate and complexity for you when dealing with many common tasks on Apple platforms such as permission checking, publishing NSUserActivity instances, URL mapping, feature flagging and in-app purchases.

I love making things easier for developers and I love apps with deeper integrations into the operating system, and it turns out this is actually a pretty huge WWDC for that.

Flint puts developers in a unique position because your code is already factored out into actions and features, so when new technologies based around user activity come along we can typically integrate them with few, if any, changes to your code. Flint knows whenever high-level actions are performed, giving it a unique vantage point in your app.

🤖 Siri Shortcuts

Siri Shortcuts appears to be an umbrella term for a bunch of related but different APIs and capabilities. I’ll tease these out as far as I am able to at this time. Spoiler: this is fantastic stuff!

Apps can now add custom actions that Siri can trigger, and we can integrate actions into user defined workflows, search, the Apple Watch Siri watch face and HomePod.

🔮 NSUserActivity eligibility for predictions

There is a new NSUserActivity.isEligibleForPrediction property coming in iOS 12 (see it mentioned here and also in the Platforms State of the Union session). It is not yet clear exactly what it means, as there is no documentation.

We’ve actually already added support for this to Flint’s automatic Activities handling on the wwdc2018 branch, but we need to do some testing before we can merge it. It should then just be a case of setting static let activityTypes = [.prediction] to enable this for any actions you wish.

This will permit Siri to use the activity in suggestions it provides in “Siri Suggestions” as well as other places in the system. The mystery here is that I thought that was what Siri Pro-active was doing for the last 2-3 years with normal NSUserActivity. I believe that the issue with plain NSUserActivity in this regard is that there is just too much noise from all the activities without any real discernible utility to them. By being more explicit about our intentions, it seems this will help Siri learn more effectively.
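To make the opt-in concrete, here is a minimal sketch of flagging a plain NSUserActivity by hand, without Flint, going only by the property name. The activity type string and the title are made up for illustration, and the code is guarded so that it only compiles where the iOS SDK is available.

```swift
import Foundation

#if os(iOS)
/// Builds an activity for a hypothetical "check order status" action.
func makeOrderStatusActivity() -> NSUserActivity {
    // Hypothetical activity type; a real app declares its own types
    // under NSUserActivityTypes in its Info.plist.
    let activity = NSUserActivity(activityType: "com.example.food.order-status")
    activity.title = "Check order status"
    activity.isEligibleForSearch = true
    if #available(iOS 12.0, *) {
        // The new, explicit opt-in for Siri predictions.
        activity.isEligibleForPrediction = true
    }
    return activity
}
#endif
```

You would then publish the activity as usual, for example by assigning it to a view controller’s userActivity property or calling becomeCurrent() on it.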

🗺 Shortcut templates for workflows and related suggestions

There’s a bit to unpack here and more details forthcoming in sessions, but it seems that your apps can give Siri information about custom intents (actions) that can be performed in your app, for use in workflows and voice activation. This sounds like a perfect fit for Flint!

In essence the API allows you to describe actions and the parameterised portions of them, so that these can later be used by the user in the new Shortcuts app (formerly known as Workflow!) and with Siri to save predefined workflows that you can trigger through Siri suggestions on screen or by voice. In the latter case you let the user record their own trigger phrase for the shortcut.

From Flint’s perspective we’ll have to see what we can do here. Hopefully we can add new conventions to the Action protocol much like the custom NSUserActivity handling overrides so that you can describe the Siri Shortcuts-facing parameters of your action and how to marshal to and from that when the user triggers your shortcut or embeds it in a workflow. I’ll have to see the sessions on this during the week to get a clearer idea.

One thing is for sure: the shortcuts are defined using a configuration file rather than at runtime, so we won’t be able to automate that part.

We may however be able to add something like donateToSiri(…) on actions, so you can do things like this:

    // The user has just ordered some food
    let orderParams = FoodOrderParams(restaurant: "Ottolenghi")
    FoodOrderFeature.placeOrder.donateToSiri(with: orderParams)

Let’s see what is possible when the sessions have aired.

⌚️ Declaring “Relevant shortcuts”

A new feature, or perhaps an enhancement of an existing one: you can now register shortcuts and give clues as to their relevance. My understanding was that Siri Pro-active did this automatically in the past, sensing what you do at different times and places and suggesting those things. It seems that the Siri watch face on Apple Watch will use this new and more explicit information to surface relevant things to you.

The new APIs around INRelevantShortcut allow you to explicitly say “Here’s a shortcut to do X and it makes sense in the evenings at home” or “This shortcut makes sense when you are near this map region”.

Once we understand more we can be more specific but it seems like it will be possible to add something to Flint along the lines of:

    FoodOrderFeature.placeOrder.addRelevantShortcut(
        when: .evening,
        where: .atHome)
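Under the hood, a helper like that would presumably end up building INRelevantShortcut values. Here is a rough sketch of the plain Intents calls as I understand them, with no Flint involved. The activity type, the title and both function names are invented for the example, and only the "evening" hint is modelled.

```swift
import Foundation

#if os(iOS)
import Intents

/// Builds a relevant shortcut hinted for evenings. Sketch only.
@available(iOS 12.0, *)
func makeEveningOrderShortcut() -> INRelevantShortcut {
    // Hypothetical activity type standing in for a "place order" action.
    let activity = NSUserActivity(activityType: "com.example.food.place-order")
    activity.title = "Order dinner"

    let relevant = INRelevantShortcut(shortcut: INShortcut(userActivity: activity))
    // "This shortcut makes sense in the evenings."
    relevant.relevanceProviders = [INDailyRoutineRelevanceProvider(situation: .evening)]
    return relevant
}

/// Hands the shortcut to the system store.
@available(iOS 12.0, *)
func registerRelevantShortcuts() {
    INRelevantShortcutStore.default.setRelevantShortcuts([makeEveningOrderShortcut()]) { error in
        if let error = error {
            print("Could not register relevant shortcuts: \(error)")
        }
    }
}
#endif
```

A location hint would follow the same pattern with a different relevance provider in the array.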

💡 Registering shortcut intents

There’s another way to register shortcuts for Siri to use, and that is to register custom intents. It’s not clear what the advantages are here but it appears that if you use this technique and provide a “Custom Intent” extension, your app’s shortcuts will be available to Siri from other devices like Apple Watch and HomePod.

For example, with this approach you could say “When is my food getting here?” to your HomePod and it would use the extension on your phone to return information about the delivery ETA.

We haven’t explored Siri Intents in Flint at all to date because the Intent domains were so limited, and it is an area we need to look at soon to see if we can use the Action paradigms there. We’ll do this before the final 1.0 release. We already have Flint.continueActivity for URL mapped Actions so if you declare intents using these, and have extensions that can perform the actions you should be good to go already. YMMV right now though!

It could be we can add a registerIntent function to actions to support this mechanism explicitly in future, reusing your existing actions for this.

🧟♀️ macOS running apps built from iOS-compatible UIKit code in future

This announcement is interesting because it supports one of the underlying themes of Flint: to make building cross platform code easy.

The approach appears to be based around making it easier to build from common source. For Flint apps, Feature and Action declarations should ideally work on all platforms you support. This is why Flint feature constraints don’t use SDK-specific types; you’ll always be able to compile all your feature definitions on all platforms, even if some permissions are not applicable to a given platform version (and hence the feature isn’t either).

This means your logic code doesn’t need to worry about feature definitions being missing on some platforms. They’re there but simply disabled because their platform constraints are not met.
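To illustrate the idea, here is a minimal sketch of a feature description whose constraints are plain Swift values, so it compiles everywhere and simply reports itself as unavailable where the constraints are not met. This is not Flint’s actual API; every type and name below is invented for the example.

```swift
/// Platforms a feature could run on. Plain values, no SDK types.
enum PlatformSketch: Hashable {
    case iOS, macOS, watchOS, tvOS
}

/// A hypothetical, heavily simplified stand-in for a feature definition.
struct FeatureSketch {
    let name: String
    let supportedPlatforms: Set<PlatformSketch>

    /// True when the feature's platform constraint is met.
    func isAvailable(on platform: PlatformSketch) -> Bool {
        return supportedPlatforms.contains(platform)
    }
}

// The definition exists on every platform the code is compiled for...
let siriShortcuts = FeatureSketch(name: "Siri shortcuts",
                                  supportedPlatforms: [.iOS, .watchOS])

// ...and shared logic can ask whether it applies, without any #if checks.
print(siriShortcuts.isAvailable(on: .macOS)) // prints "false"
```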

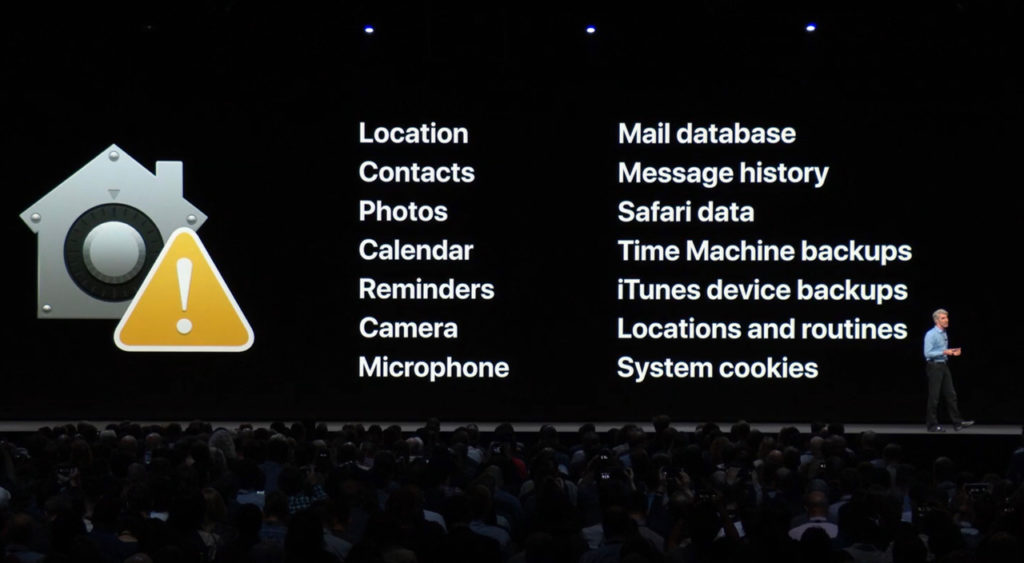

🔐 New permission authorisations on macOS

One other significant change is the introduction of camera and microphone access authorisation coming to macOS Mojave (10.14), much like on iOS. Flint will have this covered very soon, using the same .camera and .microphone permissions you use on other platforms.

It seems likely Apple will continue adding these permissions as necessary as they unify the APIs that are common to macOS and iOS in the coming iOS-app-hosting-on-macOS transition.

⤴️ New macOS Quick Actions in Finder

The new sidebar view in Finder, which shows Quick Actions relevant to the files you have selected, may be something that Flint can integrate with.

More details are needed, but it may be we can “export” your actions like we do NSUserActivity such that you can simply declare an Action as a system Quick Action. We’ll see!

🚏 os_signpost logging and Instruments

There is a new API for helping to debug performance issues in Instruments, related to the OSLog APIs that already exist. It ties together logging and markers of where your key processing begins and ends, with visibility of these markers in your Instruments timeline relative to other data you are capturing.

As of last week, Flint already supports os_log as a logging target out of the box for all your contextual logging, giving you rich high-performance logging in Console.app. In the light of this new API, we may want to add a new ActionDispatchObserver and a convention so that your actions can easily indicate whether they should take part in signposting, letting you debug their throughput with Instruments.

It would be relatively easy to add a new observer for this, but we’ll need to see what this is like in action to work out what is truly useful. We could of course add it automatically for all actions. Most actions will not do anything too time consuming themselves, but they may spawn work that is… so perhaps we can do something there automatically.
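As a rough idea of what such an observer might wrap around each action, here is a minimal sketch that calls os_signpost directly. The function name and the subsystem and category strings are placeholders, nothing here is Flint API, and on platforms without the os module it simply runs the work.

```swift
import Foundation
#if canImport(os)
import os
#endif

/// Runs `body` between a begin and an end signpost so that the interval
/// shows up in the Instruments timeline. Hypothetical helper, sketch only.
func withActionSignpost<T>(_ body: () throws -> T) rethrows -> T {
    #if canImport(os)
    if #available(macOS 10.14, iOS 12.0, tvOS 12.0, watchOS 5.0, *) {
        // Placeholder subsystem and category. A real implementation would
        // create the log once and reuse it rather than per call.
        let log = OSLog(subsystem: "com.example.app", category: "Actions")
        let id = OSSignpostID(log: log)
        os_signpost(.begin, log: log, name: "Action", signpostID: id)
        // The end marker is emitted even if `body` throws.
        defer { os_signpost(.end, log: log, name: "Action", signpostID: id) }
        return try body()
    }
    #endif
    // No signpost support here: just do the work.
    return try body()
}

// Usage: wrap whatever the action does.
let total = withActionSignpost { (1...1_000).reduce(0, +) }
print(total) // prints "500500"
```

Keeping the signpost calls in one wrapper means individual actions would not need to know about Instruments at all, which fits the observer approach.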

⚠️ App Store review crackdown on Usage Descriptions

Be warned! One of the first things mentioned in the Platforms State of the Union talk is a change to App Store review policy: they are now planning to crack down on vague or unhelpful privacy usage descriptions in your Info.plist.

While Flint cannot write these for you, we do have a mechanism in place to verify that you have specified the required keys for all the permissions you use in your app. You’ll see these warnings at startup in development where Flint sees you have declared features that require permissions for which you have no usage description yet.

This saves you from surprising assertions during QA testing where somebody hits a feature code path that you didn’t test fully, for which there is a permission required that has a missing usage description in your Info.plist.
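The check itself is simple in principle. Here is a minimal sketch of the idea, which is not Flint’s implementation: the function name and the sample dictionary are invented for illustration, while the two keys are the standard camera and microphone usage description keys.

```swift
import Foundation

/// Returns the usage description keys that are missing or blank
/// in an Info.plist dictionary.
func missingUsageDescriptions(required keys: [String],
                              in info: [String: Any]) -> [String] {
    return keys.filter { key in
        guard let text = info[key] as? String else { return true }
        return text.trimmingCharacters(in: .whitespacesAndNewlines).isEmpty
    }
}

// Keys for the permissions this hypothetical app uses.
let requiredKeys = ["NSCameraUsageDescription", "NSMicrophoneUsageDescription"]

// Sample data; in an app you would pass Bundle.main.infoDictionary ?? [:].
let sampleInfo: [String: Any] = [
    "NSCameraUsageDescription": "Used to photograph your receipts."
]

for key in missingUsageDescriptions(required: requiredKeys, in: sampleInfo) {
    print("Warning: missing usage description for \(key)")
}
```

Note that a check like this only tells you a description is present. Whether it is clear enough to pass review is still up to you.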

That’s all for now

I’ve got a lot of WWDC sessions to watch! Exciting times.